Harnessing the AI Wave: A Creator's Take on OpenAI DevDay

OpenAI's DevDay keynote felt like a powerful sunrise on a world brimming with possibilities. For developers and creatives alike, the announcements of GPT-4 Turbo mark the beginning of an exhilarating journey into a future where our tools not only understand us but support our needs, pushing the boundaries of what's possible.

GPT-4 Turbo: A New Ally in the Creative Quest

Imagine an AI so advanced that it not only understands complex instructions but also provides responses with an understanding of the world as it is today. That's GPT-4 Turbo for you—an AI so nuanced and capable that it feels less like a tool and more like a creative partner.

It's about better control and new modalities, like vision and text-to-speech, that open up the canvas of creation in ways we've only dreamed of. And for those who worry about the cost of cutting-edge tech, the new pricing model is a breath of fresh air.

OpenAI introduced updates targeted at improving user and developer experiences, broadening the applicability of its models, and addressing feedback from the community.

- Increased context length: GPT-4 Turbo supports a 128K context window, which can fit the equivalent of more than 300 pages of text in a single prompt. GPT-3.5 Turbo supports a 16K context window by default.
- Updated world knowledge: knowledge of world events up to April 2023.
- New modalities including vision and text-to-speech: GPT-4 Turbo can accept images as inputs in the Chat Completions API, enabling use cases such as generating captions, analyzing real-world images in detail, and reading documents with figures.
- Higher rate limits: tokens per minute have doubled for all paying customers.
- Copyright Shield: OpenAI will now step in and defend customers, paying costs incurred if you face legal claims around copyright infringement. This applies to generally available features of ChatGPT Enterprise and the developer platform.

The Assistants API: Simplicity Meets Capability

Imagine the complexity of building agent-like AI assistants being simplified and significantly reducing the development time and effort required. That's the magic of the new Assistants API. It's a seismic shift in development – a tool that tears down the barriers between complex AI interactions and the creators who dream them up.

The Assistants API features include persistence, retrieval, a Python code interpreter, and improved function calling. It's a leap forward in making agent-like experiences more accessible and efficient to build. OpenAI can now handle a lot of the heavy lifting that developers previously had to DIY to build high-quality AI apps.

OpenAI claims that data and files passed to the OpenAI API are never used to train their models and developers can delete the data when they see fit.

You can try the Assistants API beta without writing any code by heading to the Assistants playground.

Developer-Centric Innovations

Faster and more cost-effective: designed for production-scale applications with high throughput and strict latency requirements. GPT-4 Turbo comes at a 3x cheaper price for input tokens and a 2x cheaper price for output tokens compared to GPT-4.
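To make those ratios concrete, here is a small cost comparison. The per-1,000-token prices below are assumptions based on OpenAI's launch pricing and do not appear in this article, so check the current pricing page before relying on them. `request_cost` is a hypothetical helper name.

```python
# Assumed launch prices in USD per 1,000 tokens (not stated in this article).
PRICES = {
    "gpt-4": {"input": 0.03, "output": 0.06},
    "gpt-4-1106-preview": {"input": 0.01, "output": 0.03},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimate the USD cost of one request from its token counts."""
    price = PRICES[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


# Compare the same workload (10,000 input tokens, 1,000 output tokens) on both models.
for model in PRICES:
    print(model, round(request_cost(model, 10_000, 1_000), 4))
```

Under these assumed prices, the input rate drops by a factor of three and the output rate by a factor of two, which matches the ratios quoted above.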

GPT-4 Turbo is available for all paying developers to try by passing gpt-4-1106-preview as the model name in the API.

Reproducible outputs and log probabilities: A new seed parameter enables reproducible outputs by making the model return consistent completions most of the time. This beta feature is useful for use cases such as replaying requests for debugging, writing more comprehensive unit tests, and generally having a higher degree of control over the model behavior.
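A minimal sketch of a seeded request, using only the Python standard library against the Chat Completions HTTP endpoint. The helper names `build_request` and `send` are hypothetical, and `send` needs an `OPENAI_API_KEY` environment variable plus network access, so it is defined here but not called.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt: str, seed: int) -> dict:
    """Build a Chat Completions payload that pins the sampling seed."""
    return {
        "model": "gpt-4-1106-preview",
        "seed": seed,
        "messages": [{"role": "user", "content": prompt}],
    }


def send(payload: dict) -> dict:
    """POST the payload and return the parsed JSON response."""
    request = urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {os.environ['OPENAI_API_KEY']}",
            "Content-Type": "application/json",
        },
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)


# Replaying a request means sending an identical payload, seed included.
first = build_request("Name three Paris landmarks.", seed=42)
replay = build_request("Name three Paris landmarks.", seed=42)
```

As far as I know, responses also carry a `system_fingerprint` field. Comparing it across calls is a reasonable way to tell whether a differing completion came from a backend change rather than from your request.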

Stateful API & Infinitely long threads: No more wrestling with context windows or key-value stores. The stateful API removes the need to resend entire conversation history for every API call, which simplifies handling of context and message serialization.
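The difference is easiest to see in a toy model. The sketch below is not the OpenAI API: `ToyThread` is a hypothetical in-memory stand-in for a server-side thread, and assistant replies are left out to keep the counting simple. It only counts how many messages the client has to transmit in each style.

```python
from dataclasses import dataclass, field


@dataclass
class ToyThread:
    """Hypothetical stand-in for a thread whose history lives on the server."""
    messages: list = field(default_factory=list)

    def add(self, role: str, content: str) -> int:
        """Store one message; the client only transmits this single message."""
        self.messages.append({"role": role, "content": content})
        return 1


def stateless_call(history: list, new_message: dict) -> int:
    """Stateless style: the client resends the full history on every call."""
    payload = history + [new_message]
    history.append(new_message)
    return len(payload)


history, thread = [], ToyThread()
sent_stateless = sent_stateful = 0
for text in ["Plan a trip to Paris", "Add the Louvre", "Make it three days"]:
    sent_stateless += stateless_call(history, {"role": "user", "content": text})
    sent_stateful += thread.add("user", text)

print(sent_stateless, sent_stateful)
```

The stateless count grows quadratically with conversation length, while the stateful count grows linearly. That is the bookkeeping the Assistants API takes off the developer's hands.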

Retrieval & Understanding: AI now goes beyond immediate interactions, being able to process images and parse extensive documents. This feature lets you bring outside knowledge into the conversation, such as proprietary domain data, product information, or documents provided by your users. This means you don't need to compute and store embeddings for your documents or implement chunking and search algorithms. The Assistants API optimizes retrieval techniques.

Parallel Function Calling: GPT-4 function calling now ensures JSON output without added latency and allows multiple functions to be invoked simultaneously. For example, users can send one message requesting multiple actions, such as “open the car window and turn off the A/C”, which would previously require multiple roundtrips with the model. The new GPT-3.5 Turbo supports JSON mode and parallel function calling, too.
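Here is a sketch of how the car example might look on the client side. The tool names `set_window` and `set_ac`, their parameters, and the `sample` assistant message are all hypothetical and hand-written for illustration. Only the overall shape (a `tools` list in the request, a `tool_calls` list in the reply, and `tool` role messages sent back) follows the Chat Completions format as I understand it.

```python
import json

# Tool definitions that would be sent in the request's "tools" field.
TOOLS = [
    {
        "type": "function",
        "function": {
            "name": "set_window",
            "description": "Open or close a car window.",
            "parameters": {
                "type": "object",
                "properties": {"state": {"type": "string", "enum": ["open", "closed"]}},
                "required": ["state"],
            },
        },
    },
    {
        "type": "function",
        "function": {
            "name": "set_ac",
            "description": "Turn the air conditioning on or off.",
            "parameters": {
                "type": "object",
                "properties": {"on": {"type": "boolean"}},
                "required": ["on"],
            },
        },
    },
]


def set_window(state: str) -> str:
    return f"window {state}"


def set_ac(on: bool) -> str:
    return "A/C on" if on else "A/C off"


HANDLERS = {"set_window": set_window, "set_ac": set_ac}


def run_tool_calls(message: dict) -> list:
    """Run every tool call in one assistant message and build the tool replies."""
    replies = []
    for call in message.get("tool_calls") or []:
        handler = HANDLERS[call["function"]["name"]]
        arguments = json.loads(call["function"]["arguments"])
        replies.append(
            {"role": "tool", "tool_call_id": call["id"], "content": handler(**arguments)}
        )
    return replies


# Hand-written sample of one assistant message that requests two actions at once.
sample = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "set_window", "arguments": '{"state": "open"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "set_ac", "arguments": '{"on": false}'}},
    ],
}
replies = run_tool_calls(sample)
```

Both actions arrive in a single assistant message, so the client can execute them and return both results in one follow-up request instead of two roundtrips.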

GPT-4 Turbo with Vision: Developers can access vision in the Chat Completions API by using gpt-4-vision-preview. Pricing depends on the input image size. For instance, passing an image with 1080×1080 pixels to GPT-4 Turbo costs $0.00765. Check out the vision guide.
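The $0.00765 figure can be reproduced with a short calculation. The sketch below assumes the high-detail token rule described in OpenAI's vision guide at launch (fit the image within 2048×2048, shrink the shortest side to 768 pixels, then charge 170 tokens per 512-pixel tile plus a flat 85) and an assumed input price of $0.01 per 1,000 tokens. Neither the rule nor the price is stated in this article, and the function names are hypothetical.

```python
import math

PRICE_PER_1K_INPUT_TOKENS = 0.01  # assumed GPT-4 Turbo input price in USD


def vision_tokens(width: float, height: float) -> int:
    """Estimate input tokens for one high-detail image (assumed rule)."""
    longest = max(width, height)
    if longest > 2048:  # step 1: fit inside a 2048 x 2048 square
        width, height = width * 2048 / longest, height * 2048 / longest
    shortest = min(width, height)
    if shortest > 768:  # step 2: shrink so the shortest side is 768 px
        width, height = width * 768 / shortest, height * 768 / shortest
    tiles = math.ceil(width / 512) * math.ceil(height / 512)
    return 85 + 170 * tiles  # flat base cost plus a per-tile cost


def vision_cost(width: float, height: float) -> float:
    """Estimate the USD cost of one image from its token count."""
    return vision_tokens(width, height) * PRICE_PER_1K_INPUT_TOKENS / 1000


print(vision_tokens(1080, 1080), vision_cost(1080, 1080))
```

A 1080×1080 image is scaled to 768×768, which covers four tiles, giving 4 × 170 + 85 = 765 tokens. At the assumed price that works out to the $0.00765 quoted above.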

Integration of DALL·E 3 API: This integration was showcased through the generation of destination illustrations on a travel app, hinting at the seamless fusion of AI-generated media into applications. The throttle is off, friends. With higher rate limits and the DALL·E 3 API, our creative toolkit just expanded exponentially.

Transparency in AI Operations: Developers can now track the AI's thought process, ensuring clarity and confidence in the tools they use.

Code Interpreter in Action: writes and runs Python code in a sandboxed execution environment, and can generate graphs and charts, and process files. It allows your assistants to run code iteratively. Demonstrated by computing a shared expense query, the Code Interpreter can handle on-the-fly computations and is adaptable for more complex tasks such as those in financial or analytical applications.

Direct Interaction with the Internet: The assistants can now connect to the Internet and perform real-world actions like issuing OpenAI credits to selected attendees.

The Magic of Real-Time AI: Paris in a Pin Drop

Romain, head of developer experience at OpenAI, commanded an AI to plan a Paris trip live on stage. It was like watching a magician pulling destinations out of a hat – except the magic was real, and the hat was the new Assistants API. The cheers were well-deserved when that AI dropped pins on a map for top spots in Paris, all in real time: real-time responses and intuitive interactions, with the ease of a casual chat. The demo highlighted integrations with app functions, managed state, knowledge retrieval, and custom actions. The Assistants API promises easier access to external actions and knowledge, which previously required complex and resource-intensive development.

Voice: Your New AI Sidekick

Voice technology took center stage, transforming our concept of AI from text-based to voice-responsive personalities. The demo brought to life an AI that could speak, decide, and act – all through the power of voice. During the demo, Romain commanded his AI to hand out OpenAI credits to attendees. All attendees were offered $500 in OpenAI credits, demonstrating both the API's capability and the company's community engagement. With voice integration, powered by Whisper for speech recognition and the new TTS voices for speech output, Romain illustrated the potential for hands-free interaction with AI across various applications.

Using natural language to "program" these GPTs could make AI development more accessible to those without traditional coding skills, expanding the number of people who can create and benefit from AI applications.

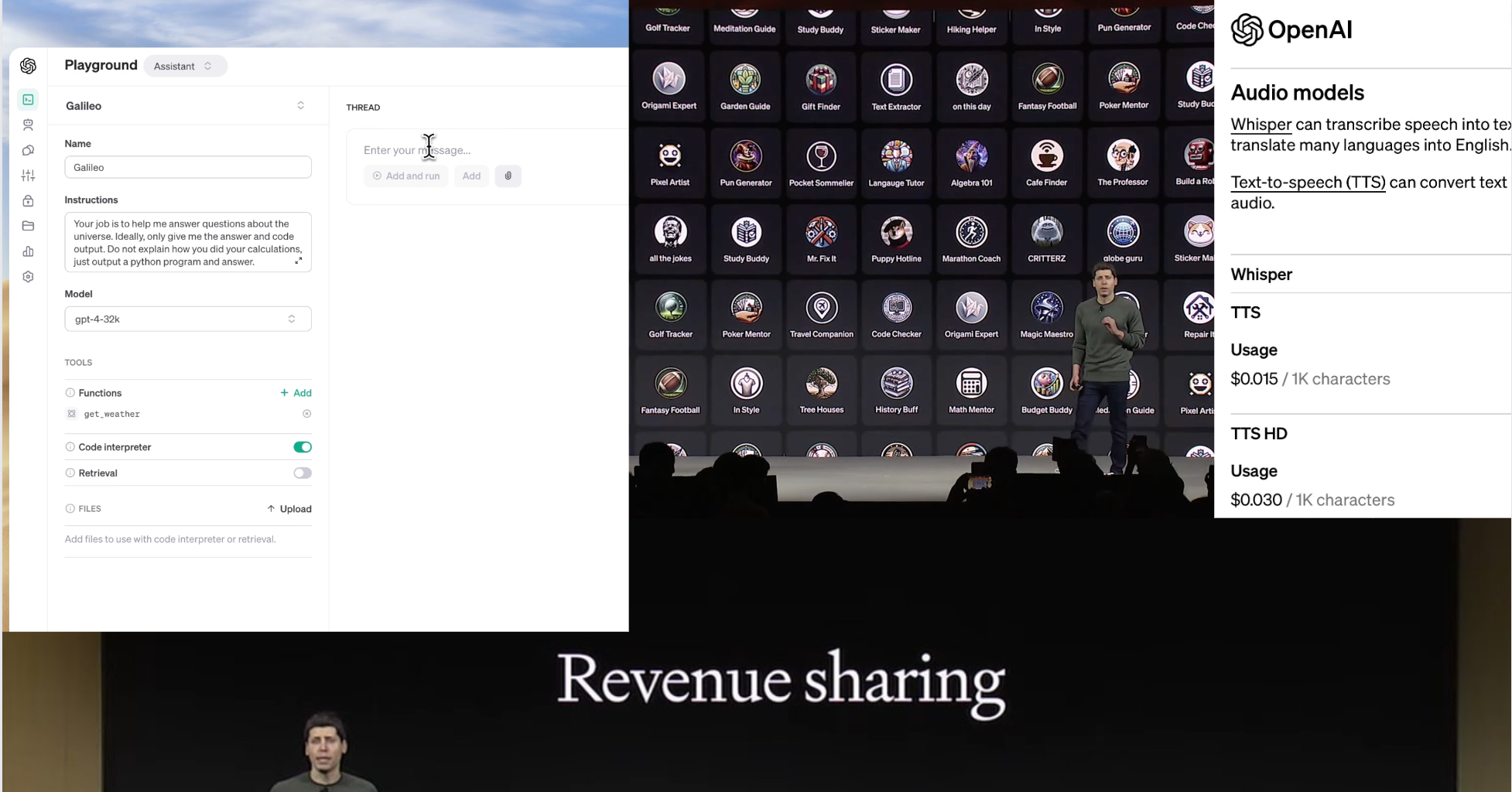

Text-to-speech (TTS): Developers can now generate human-quality speech from text via the text-to-speech API. The TTS model offers six preset voices to choose from and two model variants, tts-1 and tts-1-hd. tts-1 is optimized for real-time use cases and tts-1-hd is optimized for quality. Pricing starts at $0.015 per 1,000 input characters. Check out the TTS guide to get started.
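A rough sketch of preparing a speech request and estimating its cost. The voice names and the tts-1-hd price are assumptions from the API documentation at launch and do not appear in this article. The helper names are hypothetical, and the payload is what I would expect to POST to the `/v1/audio/speech` endpoint.

```python
# Assumed preset voice names (the article only says there are six).
VOICES = ("alloy", "echo", "fable", "onyx", "nova", "shimmer")

# USD per 1,000 input characters; the tts-1-hd price is an assumption.
PRICE_PER_1K_CHARS = {"tts-1": 0.015, "tts-1-hd": 0.030}


def build_speech_request(text: str, voice: str = "alloy", realtime: bool = True) -> dict:
    """Build a speech payload, picking tts-1 for latency or tts-1-hd for quality."""
    if voice not in VOICES:
        raise ValueError(f"unknown voice: {voice}")
    return {
        "model": "tts-1" if realtime else "tts-1-hd",
        "voice": voice,
        "input": text,
    }


def speech_cost(text: str, model: str = "tts-1") -> float:
    """Estimate the USD cost of synthesizing the given text."""
    return len(text) / 1000 * PRICE_PER_1K_CHARS[model]


request = build_speech_request("Welcome to Paris!", voice="nova")
print(request, speech_cost("Welcome to Paris!"))
```

Pricing is per input character, so cost scales with the length of the text rather than the length of the generated audio.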

The GPT Store: A Marketplace for Ingenuity

OpenAI has introduced an innovative way to create custom versions of ChatGPT called GPTs. These can be tailored for specific tasks to assist in daily life, work, or at home, and then shared with others. GPTs enable users to learn the rules of board games, teach math, design stickers, and more. They can be created without coding knowledge, for personal use, for a company's internal use, or for everyone. The process is straightforward: start a conversation, give instructions and extra knowledge, and select capabilities like web search, image creation, or data analysis.

GPTs are designed with the community in mind, anticipating that the most incredible versions will come from builders in the community, from educators to hobbyists. OpenAI is rolling out a GPT Store later in November 2023, where verified builders can share their creations. These GPTs will be searchable and may climb leaderboards, with categories like productivity, education, and fun.

In the future, creators will have the opportunity to earn from their GPTs based on usage. This economic model would be revolutionary for AI and empower creators just like Apple empowered them with the Apple App Store. The strategy might evolve, beginning with a straightforward revenue share and possibly moving towards allowing subscriptions to individual GPTs if there's a demand for such a model.

The launch of GPTs comes with a focus on privacy and safety. User interactions with GPTs are not shared with builders, and users have control over third-party API data sharing. Builders can decide if interactions with their GPTs can be used to improve the models. OpenAI has established systems to review GPTs against usage policies, aiming to prevent the sharing of harmful content, and has introduced verification processes for builders' identities.

Developers can now connect GPTs to the real world. By defining custom actions and integrating APIs, GPTs can interact with external data or real-world systems, like databases, emails, or e-commerce platforms. This allows for creative and practical applications, such as integrating a travel listings database or managing e-commerce orders.

Superpowers on Demand: The New Creative Paradigm

As the keynote unfolded, it became clear that OpenAI isn't just offering tools; it's offering superpowers on demand. With each demonstration and each revelation, the message was unmistakable: this is the dawn of a new paradigm where creatives and developers alike wield powers once relegated to the realm of the imagination. The future is here, and it's laden with an arsenal of AI capabilities that promise to amplify our creative spirit and technical prowess like never before.